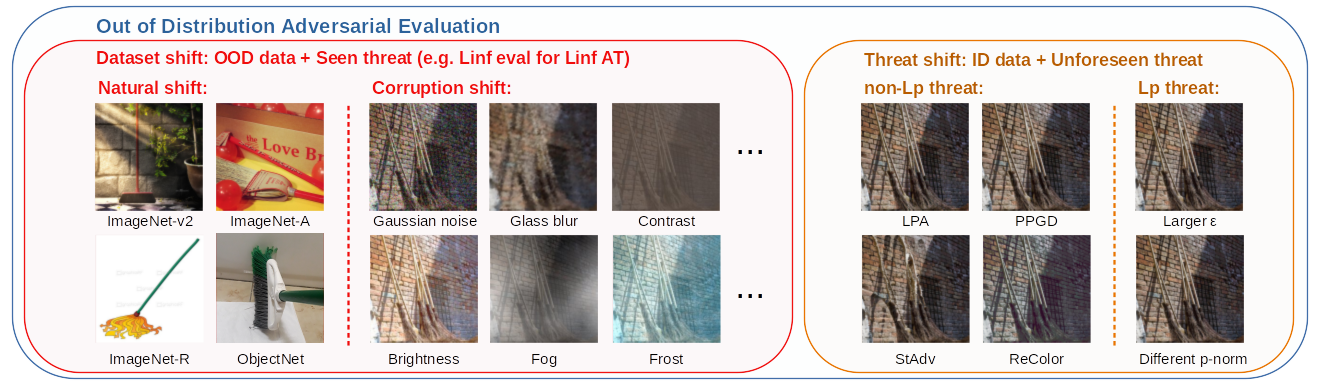

Existing works have made great progress in improving adversarial robustness, but they typically test their methods only on data from the same distribution as the training data, i.e., in-distribution (ID) testing. As a result, it is unclear how such robustness generalizes under input distribution shifts, i.e., out-of-distribution (OOD) testing. This is a concerning omission, as such distribution shifts are unavoidable when methods are deployed in the wild. To address this issue, we propose a benchmark named OODRobustBench to comprehensively assess OOD adversarial robustness using 23 dataset-wise shifts (i.e., naturalistic shifts in input distribution) and 6 threat-wise shifts (i.e., unforeseen adversarial threat models).

Leaderboard: CIFAR-10, \( \ell_\infty = 8/255 \), untargeted attack

FAQ

➤ How does OODRobustBench differ from RobustBench? 🤔

Our benchmark focuses on OOD adversarial robustness, whereas RobustBench focuses on ID adversarial robustness. Specifically, the two benchmarks differ in both the datasets and the attacks they use. To simulate shifts in the input data distribution, we evaluate on CIFAR-10.1, CIFAR-10.2, CINIC, and CIFAR-10-R for the source dataset CIFAR-10, and on ImageNet-V2, ImageNet-A, ImageNet-R, and ObjectNet for the source dataset ImageNet; RobustBench evaluates only on the source datasets themselves. To simulate threat shifts, we evaluate models trained against Linf-8/255 with PPGD, LPA, ReColor, StAdv, Linf-12/255, and L2-0.5, and models trained against L2-0.5 with PPGD, LPA, ReColor, StAdv, Linf-8/255, and L2-1; RobustBench evaluates only the threat used in training.
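The shifts named in this answer can be summarized as a small lookup table. The sketch below is only an illustration: the names `DATASET_SHIFTS`, `THREAT_SHIFTS`, and `ood_evaluations` are hypothetical and are not part of the OODRobustBench code, and the table covers only the shifts listed in this answer, not all 23 dataset-wise shifts of the benchmark.

```python
# Hypothetical summary of the shifts named in the FAQ answer above.
# This is NOT the OODRobustBench API; all names here are illustrative.

# Source dataset -> shifted datasets used to simulate input distribution shift.
DATASET_SHIFTS = {
    "CIFAR-10": ["CIFAR-10.1", "CIFAR-10.2", "CINIC", "CIFAR-10-R"],
    "ImageNet": ["ImageNet-V2", "ImageNet-A", "ImageNet-R", "ObjectNet"],
}

# Training threat -> unforeseen threats used to simulate threat shift.
THREAT_SHIFTS = {
    "Linf-8/255": ["PPGD", "LPA", "ReColor", "StAdv", "Linf-12/255", "L2-0.5"],
    "L2-0.5": ["PPGD", "LPA", "ReColor", "StAdv", "Linf-8/255", "L2-1"],
}


def ood_evaluations(source_dataset, training_threat):
    """Return the OOD evaluations for a model, given how it was trained."""
    return {
        "dataset_shifts": list(DATASET_SHIFTS[source_dataset]),
        "threat_shifts": list(THREAT_SHIFTS[training_threat]),
    }


plan = ood_evaluations("CIFAR-10", "Linf-8/255")
print(plan["dataset_shifts"])
print(plan["threat_shifts"])
```

An ID-only evaluation, by contrast, would correspond to testing on the key itself in each table (the source dataset and the training threat).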

➤ Is the linear trend of robustness really expected given the linear trend of accuracy (a.k.a. accuracy-on-the-line)? 🤔

No. There is a well-known trade-off between accuracy and robustness in the ID setting, and we confirm it for the models we evaluate in Figure 13 of our paper. Accuracy and robustness therefore usually move in opposite directions, which makes the linear trend we discover in both particularly interesting. Furthermore, threat shifts as an OOD scenario are unique to adversarial evaluation and were thus never explored in previous studies of accuracy trends.

➤ How does our analysis of ID-OOD trends differ from the similar one in RobustBench? 🤔

The analysis of ID-OOD trends in RobustBench is rather small in scale. RobustBench observes a linear correlation for only three shifts on CIFAR-10, based on 39 models with either ResNet or WideResNet architectures. In such a narrow setting, a linear trend is not surprising, nor is it reliable for predicting OOD performance. By contrast, our conclusion is derived from many more shifts on CIFAR-10 and ImageNet, based on 706 models. Importantly, our model zoo covers a diverse set of architectures, robust training methods, data augmentation techniques, and training set-ups. This makes our conclusion more generalizable and the observed (almost perfect) linear trend much more significant.
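To make concrete what a linear ID-OOD trend means in practice, here is a minimal sketch of an ordinary least-squares fit of OOD robustness against ID robustness, with R² as the measure of how linear the trend is. The data are synthetic placeholders generated in the script, not results from the benchmark, and the function name `fit_linear_trend` is hypothetical. The paper's exact fitting procedure may differ.

```python
import random


def fit_linear_trend(id_scores, ood_scores):
    """Least-squares fit ood = slope * id + intercept; returns (slope, intercept, r2)."""
    n = len(id_scores)
    mean_x = sum(id_scores) / n
    mean_y = sum(ood_scores) / n
    var_x = sum((x - mean_x) ** 2 for x in id_scores)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(id_scores, ood_scores))
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    # R^2 = 1 - residual sum of squares / total sum of squares.
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(id_scores, ood_scores))
    ss_tot = sum((y - mean_y) ** 2 for y in ood_scores)
    return slope, intercept, 1.0 - ss_res / ss_tot


# Synthetic placeholder data (NOT benchmark results): 50 imaginary models whose
# OOD robustness is a noisy linear function of their ID robustness (in %).
rng = random.Random(0)
id_rob = [rng.uniform(30.0, 70.0) for _ in range(50)]
ood_rob = [0.8 * x - 5.0 + rng.gauss(0.0, 1.0) for x in id_rob]

slope, intercept, r2 = fit_linear_trend(id_rob, ood_rob)
print(f"slope={slope:.3f}, intercept={intercept:.3f}, R^2={r2:.3f}")

# With a strong linear trend, the OOD robustness of a new model can be
# estimated from its ID robustness alone.
predicted_ood = slope * 55.0 + intercept
print(f"predicted OOD robustness at 55% ID robustness: {predicted_ood:.1f}%")
```

An R² close to 1 across many diverse models is what makes such a fit useful for prediction. With only a few dozen similar models, a high R² says much less.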

Citation

@inproceedings{li2024oodrobustbench,
  title={OODRobustBench: a Benchmark and Large-Scale Analysis of Adversarial Robustness under Distribution Shift},
  author={Lin Li and Yifei Wang and Chawin Sitawarin and Michael Spratling},
  booktitle={International Conference on Machine Learning},
  year={2024}
}

Contribute to OODRobustBench!